Note: This is a reprint of a post I wrote for my company, NetWitness (www.netwitness.com). It is unchanged from the original, which can be found at: http://www.networkforensics.com/2010/11/25/identifying-the-country-of-origin-for-a-malware-pe-executable/

11/16/2009 6:41:48 PM –> Hook instalate lsass.exe

We can use Google Translate’s “language detect” feature to help us determine the language used:

Of course, it’s not often we get THAT lucky!

A more interesting method is the examination of certain structures known as the Resource Directory within the executable file itself. For the purpose of this post, I will not be describing the Resource Directory structure. It’s a complicated beast, making it a topic I will save for later posts that actually warrant and/or require a low-level understanding of it. Suffice it to say, the Resource Directory is where embedded resources like bitmaps (used in GUI graphics), file icons, etc. are stored. The structure is frequently compared to the layout of files on a file system, although I think it’s insulting to file systems to say such a thing. For those more graphically inclined, I took the following image from http://www.devsource.com/images/stories/PEFigure2.jpg.

For the sake of example, here are some images showing just a few of the resources embedded inside notepad.exe (using CFF Explorer from http://www.ntcore.com/exsuite.php):

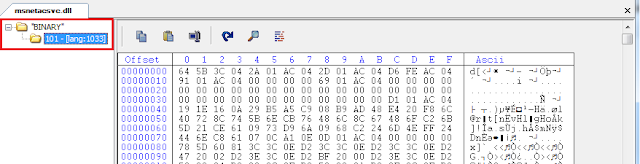

Now it’s important to note that an executable may have only a few or even zero resources – especially in the case of malware. Consider the following example showing a recent piece of malware with only a single resource called “BINARY.”

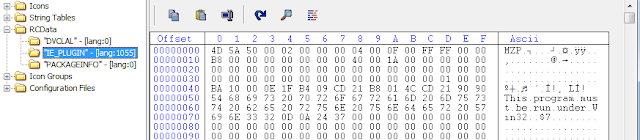

Moving on, let’s look at another piece of malware… Below, we see this piece of malware has five resource directories.

We could pick any of the five for this analysis, but I’ll pick RCData – mostly because it’s typically an interesting directory to examine when reverse engineering malware. (This is because RCData defines a raw data resource for an application. Raw data resources permit the inclusion of any binary data directly in the executable file.) Under RCData, we see three separate entries:

The first one to catch my eye is the one called IE_PLUGIN. I’ll show a screenshot of it below, but am saving the subject of executables embedded within executables for a MUCH more technical post in the near future (when it’s not 1:30 am and I actually feel like writing more!).

Going back to the entry structure itself, the IE_PLUGIN entry will have at least one Directory Entry underneath it to describe the size(s) and offset(s) to the data contained within that resource. I have expanded it as shown next:

And that’s where things get interesting, at least as it relates to answering the question at the start of this post. Notice the ID: 1055. That’s our money shot for helping to determine what country this binary was compiled in. Or, more specifically, the default locale ID of the computer used to compile this binary. Those IDs have very legitimate uses. For example, you can have the same dialog in localized English, French and German forms, and the system will choose which dialog to load based on the thread’s locale. However, when resources are added to the binary without explicitly setting them to different locale IDs, those resources will be assigned the default locale ID of the compiler’s computer.
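To make the "type, then name, then language ID" layering a little more concrete, here is a minimal sketch of a walker over the raw bytes of a resource section. It assumes you have already carved the section out of the file into a `bytes` buffer. The function name `walk_resources` and the depth cap are illustrative choices, not part of any tool mentioned in this post. The field offsets follow the documented `IMAGE_RESOURCE_DIRECTORY` layout.

```python
import struct

def walk_resources(rsrc: bytes, offset: int = 0, path: tuple = ()):
    """Yield (path, data_rva, size) for every leaf in a raw resource section.

    `path` collects the ID (or name) seen at each level; in the usual
    layout that is (type, name, language ID).
    """
    if len(path) > 8:
        # Illustrative guard: malformed files can contain looping directories.
        return
    # Directory header is 16 bytes; the last two WORDs count named and ID entries.
    named, ids = struct.unpack_from("<HH", rsrc, offset + 12)
    for i in range(named + ids):
        name_field, data_field = struct.unpack_from("<II", rsrc, offset + 16 + 8 * i)
        if name_field & 0x80000000:
            # High bit set: low 31 bits point at a length-prefixed UTF-16 string.
            str_off = name_field & 0x7FFFFFFF
            (length,) = struct.unpack_from("<H", rsrc, str_off)
            key = rsrc[str_off + 2 : str_off + 2 + 2 * length].decode("utf-16-le")
        else:
            key = name_field
        if data_field & 0x80000000:
            # High bit set: this entry points at another directory level.
            yield from walk_resources(rsrc, data_field & 0x7FFFFFFF, path + (key,))
        else:
            # Leaf: a data entry holding the RVA and size of the resource data.
            rva, size = struct.unpack_from("<II", rsrc, data_field)
            yield path + (key,), rva, size
```

For an entry like the one discussed here, the last element of `path` would be the language ID (1055 in this example). A production parser would also need stricter bounds checking than this sketch does; out-of-range offsets simply raise `struct.error`.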

So in the example above, what does 1055 mean?

It means this piece of malware was likely developed in (or at least compiled in) Turkey.
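If you'd rather not rely only on a lookup table, the ID itself can be taken apart. A Windows language ID packs a primary language into the low 10 bits and a sublanguage (the regional variant) into the bits above that. The helper name `split_langid` below is made up for illustration; the bit layout is the standard one.

```python
def split_langid(langid: int) -> tuple[int, int]:
    """Split a 16-bit Windows language ID into (primary language, sublanguage)."""
    return langid & 0x3FF, langid >> 10

primary, sub = split_langid(1055)
print(hex(1055), hex(primary), sub)  # 0x41f 0x1f 1

# Regional variants share a primary language and differ only in sublanguage:
print(split_langid(1033))  # (9, 1)  English, United States
print(split_langid(2057))  # (9, 2)  English, Great Britain
```

This is also why the regional variants of a language sit exactly 1024 apart in decimal.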

How do we know that one resource wasn’t added with a custom ID? Because we see the same ID when looking at almost all the other resources in the file (anything with an ID of zero just means “use the default locale”):
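That "same ID almost everywhere" check is easy to automate once you have the language IDs in a list. The sketch below is only an illustration: the function name `language_votes` and the sample IDs are hypothetical, not taken from the sample analyzed here.

```python
from collections import Counter

def language_votes(lang_ids):
    """Count language IDs, skipping zero ("use the default locale")."""
    return Counter(lang for lang in lang_ids if lang != 0)

# Hypothetical IDs collected from one binary's resources.
ids = [1055, 1055, 0, 1055, 1033, 0]
votes = language_votes(ids)
top_id, top_count = votes.most_common(1)[0]
print(top_id, top_count, sum(votes.values()))
```

A single dominant ID supports the "compiler default" reading; an even spread across several IDs suggests deliberate localization instead.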

In this case, we are also lucky enough to have other strings in the binary (once unpacked) to help solidify the assertion this binary is from Turkey. One such string is “Aktif Pencere,” which Google’s Translation detection engine shows as:

However, as you can see, this technique is very useful even when no strings are present – in logs or the binary itself.

So is this how default binary locale identification normally works (e.g., for non-malware executable files)?

Not exactly. The above techniques are generally used with malware (if the malware even has exposed resources), but not generally with normal/legitimate binaries. Consider the following legitimate binary. What is the source locale for the following example?

As you see in the green box, we have some cursor resources with the ID for the United States. (I’m including a lookup table at the bottom of this post.) In the orange box, there are additional cursor resources with the ID for Germany. In the red box is RCData, like we examined before, but all of these resources have the ID specifying the default language of the computer executing the application.

As it turns out, the normal value to examine is the ID for the Version Information Table resource (in the blue box). In the case above, it's the Czech Republic. The Version Information Table contains the “metadata” you normally see depicted in locations like this:

In the above screenshot, Windows is identifying the source/target locale as English, and specifically, United States English (as opposed to UK English, Australian English, etc…). That information is not stored within the Version Information table, but rather is determined by the ID of the Version Information Table.

However, in malware, the Version Information table is almost always stripped or mangled, as is the case with our original example from earlier:

Because of that, the earlier techniques are more applicable to malware.
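Putting the two approaches together, one possible decision rule is: trust the Version Information resource's ID when it exists, and otherwise fall back to the most common non-zero ID among the remaining resources. The sketch below assumes the resources have already been listed as `(type_id, lang_id)` pairs. The function name `pick_locale_id` and the sample data are hypothetical; 16 is the standard resource type ID for version information.

```python
from collections import Counter

RT_VERSION = 16  # standard resource type ID for the version information table

def pick_locale_id(resources):
    """Return (lang_id, reason) for an iterable of (type_id, lang_id) pairs."""
    resources = list(resources)
    # Preferred source: the ID attached to the version information resource.
    for type_id, lang_id in resources:
        if type_id == RT_VERSION and lang_id != 0:
            return lang_id, "version-info"
    # Fallback for stripped binaries: majority vote over non-zero IDs.
    votes = Counter(lang for _, lang in resources if lang != 0)
    if votes:
        return votes.most_common(1)[0][0], "majority"
    return None, "none"

# Hypothetical legitimate binary: mixed cursor IDs, version info says Czech.
print(pick_locale_id([(1, 1033), (1, 1031), (10, 0), (16, 1029)]))
# Hypothetical malware: no version info, RCData entries share one ID.
print(pick_locale_id([(10, 1055), (10, 1055), (3, 0)]))
```

Either answer is a hint rather than proof, since resource IDs can be set to anything by whoever builds the file.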

Below, I’m including a table to help you translate Resource Entry IDs to locales (sorted by decimal ID number).

| Locale | Language code | Locale name | LCID (decimal) | Codepage |
| --- | --- | --- | --- | --- |
| Arabic – Saudi Arabia | ar | ar-sa | 1025 | 1256 |
| Bulgarian | bg | bg | 1026 | 1251 |
| Catalan | ca | ca | 1027 | 1252 |
| Chinese – Taiwan | zh | zh-tw | 1028 | |
| Czech | cs | cs | 1029 | 1250 |
| Danish | da | da | 1030 | 1252 |
| German – Germany | de | de-de | 1031 | 1252 |
| Greek | el | el | 1032 | 1253 |
| English – United States | en | en-us | 1033 | 1252 |
| Spanish – Spain (Traditional) | es | es-es | 1034 | 1252 |
| Finnish | fi | fi | 1035 | 1252 |
| French – France | fr | fr-fr | 1036 | 1252 |
| Hebrew | he | he | 1037 | 1255 |
| Hungarian | hu | hu | 1038 | 1250 |
| Icelandic | is | is | 1039 | 1252 |
| Italian – Italy | it | it-it | 1040 | 1252 |
| Japanese | ja | ja | 1041 | |
| Korean | ko | ko | 1042 | |
| Dutch – Netherlands | nl | nl-nl | 1043 | 1252 |
| Norwegian – Bokmål | nb | no-no | 1044 | 1252 |
| Polish | pl | pl | 1045 | 1250 |
| Portuguese – Brazil | pt | pt-br | 1046 | 1252 |
| Raeto-Romance | rm | rm | 1047 | |
| Romanian – Romania | ro | ro | 1048 | 1250 |
| Russian | ru | ru | 1049 | 1251 |
| Croatian | hr | hr | 1050 | 1250 |
| Slovak | sk | sk | 1051 | 1250 |
| Albanian | sq | sq | 1052 | 1250 |
| Swedish – Sweden | sv | sv-se | 1053 | 1252 |
| Thai | th | th | 1054 | |
| Turkish | tr | tr | 1055 | 1254 |
| Urdu | ur | ur | 1056 | 1256 |
| Indonesian | id | id | 1057 | 1252 |
| Ukrainian | uk | uk | 1058 | 1251 |
| Belarusian | be | be | 1059 | 1251 |
| Slovenian | sl | sl | 1060 | 1250 |
| Estonian | et | et | 1061 | 1257 |
| Latvian | lv | lv | 1062 | 1257 |
| Lithuanian | lt | lt | 1063 | 1257 |
| Tajik | tg | tg | 1064 | |
| Farsi – Persian | fa | fa | 1065 | 1256 |
| Vietnamese | vi | vi | 1066 | 1258 |
| Armenian | hy | hy | 1067 | |
| Azeri – Latin | az | az-az | 1068 | 1254 |
| Basque | eu | eu | 1069 | 1252 |
| Sorbian | sb | sb | 1070 | |
| FYRO Macedonia | mk | mk | 1071 | 1251 |
| Sesotho (Sutu) | | | 1072 | |
| Tsonga | ts | ts | 1073 | |
| Setsuana | tn | tn | 1074 | |
| Venda | | | 1075 | |
| Xhosa | xh | xh | 1076 | |
| Zulu | zu | zu | 1077 | |
| Afrikaans | af | af | 1078 | 1252 |
| Georgian | ka | | 1079 | |
| Faroese | fo | fo | 1080 | 1252 |
| Hindi | hi | hi | 1081 | |
| Maltese | mt | mt | 1082 | |
| Sami Lappish | | | 1083 | |
| Gaelic – Scotland | gd | gd | 1084 | |
| Yiddish | yi | yi | 1085 | |
| Malay – Malaysia | ms | ms-my | 1086 | 1252 |
| Kazakh | kk | kk | 1087 | 1251 |
| Kyrgyz – Cyrillic | | | 1088 | 1251 |
| Swahili | sw | sw | 1089 | 1252 |
| Turkmen | tk | tk | 1090 | |
| Uzbek – Latin | uz | uz-uz | 1091 | 1254 |
| Tatar | tt | tt | 1092 | 1251 |
| Bengali – India | bn | bn | 1093 | |
| Punjabi | pa | pa | 1094 | |
| Gujarati | gu | gu | 1095 | |
| Oriya | or | or | 1096 | |
| Tamil | ta | ta | 1097 | |
| Telugu | te | te | 1098 | |
| Kannada | kn | kn | 1099 | |
| Malayalam | ml | ml | 1100 | |
| Assamese | as | as | 1101 | |
| Marathi | mr | mr | 1102 | |
| Sanskrit | sa | sa | 1103 | |
| Mongolian | mn | mn | 1104 | 1251 |
| Tibetan | bo | bo | 1105 | |
| Welsh | cy | cy | 1106 | |
| Khmer | km | km | 1107 | |
| Lao | lo | lo | 1108 | |
| Burmese | my | my | 1109 | |
| Galician | gl | | 1110 | 1252 |
| Konkani | | | 1111 | |
| Manipuri | | | 1112 | |
| Sindhi | sd | sd | 1113 | |
| Syriac | | | 1114 | |
| Sinhala; Sinhalese | si | si | 1115 | |
| Amharic | am | am | 1118 | |
| Kashmiri | ks | ks | 1120 | |
| Nepali | ne | ne | 1121 | |
| Frisian – Netherlands | | | 1122 | |
| Filipino | | | 1124 | |
| Divehi; Dhivehi; Maldivian | dv | dv | 1125 | |
| Edo | | | 1126 | |
| Igbo – Nigeria | | | 1136 | |
| Guarani – Paraguay | gn | gn | 1140 | |
| Latin | la | la | 1142 | |
| Somali | so | so | 1143 | |
| Maori | mi | mi | 1153 | |
| HID (Human Interface Device) | | | 1279 | |
| Arabic – Iraq | ar | ar-iq | 2049 | 1256 |
| Chinese – China | zh | zh-cn | 2052 | |
| German – Switzerland | de | de-ch | 2055 | 1252 |
| English – Great Britain | en | en-gb | 2057 | 1252 |
| Spanish – Mexico | es | es-mx | 2058 | 1252 |
| French – Belgium | fr | fr-be | 2060 | 1252 |
| Italian – Switzerland | it | it-ch | 2064 | 1252 |
| Dutch – Belgium | nl | nl-be | 2067 | 1252 |
| Norwegian – Nynorsk | nn | no-no | 2068 | 1252 |
| Portuguese – Portugal | pt | pt-pt | 2070 | 1252 |
| Romanian – Moldova | ro | ro-mo | 2072 | |
| Russian – Moldova | ru | ru-mo | 2073 | |
| Serbian – Latin | sr | sr-sp | 2074 | 1250 |
| Swedish – Finland | sv | sv-fi | 2077 | 1252 |
| Azeri – Cyrillic | az | az-az | 2092 | 1251 |
| Gaelic – Ireland | gd | gd-ie | 2108 | |
| Malay – Brunei | ms | ms-bn | 2110 | 1252 |
| Uzbek – Cyrillic | uz | uz-uz | 2115 | 1251 |
| Bengali – Bangladesh | bn | bn | 2117 | |
| Mongolian | mn | mn | 2128 | |
| Arabic – Egypt | ar | ar-eg | 3073 | 1256 |
| Chinese – Hong Kong SAR | zh | zh-hk | 3076 | |
| German – Austria | de | de-at | 3079 | 1252 |
| English – Australia | en | en-au | 3081 | 1252 |
| French – Canada | fr | fr-ca | 3084 | 1252 |
| Serbian – Cyrillic | sr | sr-sp | 3098 | 1251 |
| Arabic – Libya | ar | ar-ly | 4097 | 1256 |
| Chinese – Singapore | zh | zh-sg | 4100 | |
| German – Luxembourg | de | de-lu | 4103 | 1252 |
| English – Canada | en | en-ca | 4105 | 1252 |
| Spanish – Guatemala | es | es-gt | 4106 | 1252 |
| French – Switzerland | fr | fr-ch | 4108 | 1252 |
| Arabic – Algeria | ar | ar-dz | 5121 | 1256 |
| Chinese – Macau SAR | zh | zh-mo | 5124 | |
| German – Liechtenstein | de | de-li | 5127 | 1252 |
| English – New Zealand | en | en-nz | 5129 | 1252 |
| Spanish – Costa Rica | es | es-cr | 5130 | 1252 |
| French – Luxembourg | fr | fr-lu | 5132 | 1252 |
| Bosnian | bs | bs | 5146 | |
| Arabic – Morocco | ar | ar-ma | 6145 | 1256 |
| English – Ireland | en | en-ie | 6153 | 1252 |
| Spanish – Panama | es | es-pa | 6154 | 1252 |
| French – Monaco | fr | | 6156 | 1252 |
| Arabic – Tunisia | ar | ar-tn | 7169 | 1256 |
| English – Southern Africa | en | en-za | 7177 | 1252 |
| Spanish – Dominican Republic | es | es-do | 7178 | 1252 |
| French – West Indies | fr | | 7180 | |
| Arabic – Oman | ar | ar-om | 8193 | 1256 |
| English – Jamaica | en | en-jm | 8201 | 1252 |
| Spanish – Venezuela | es | es-ve | 8202 | 1252 |
| Arabic – Yemen | ar | ar-ye | 9217 | 1256 |
| English – Caribbean | en | en-cb | 9225 | 1252 |
| Spanish – Colombia | es | es-co | 9226 | 1252 |
| French – Congo | fr | | 9228 | |
| Arabic – Syria | ar | ar-sy | 10241 | 1256 |
| English – Belize | en | en-bz | 10249 | 1252 |
| Spanish – Peru | es | es-pe | 10250 | 1252 |
| French – Senegal | fr | | 10252 | |
| Arabic – Jordan | ar | ar-jo | 11265 | 1256 |
| English – Trinidad | en | en-tt | 11273 | 1252 |
| Spanish – Argentina | es | es-ar | 11274 | 1252 |
| French – Cameroon | fr | | 11276 | |
| Arabic – Lebanon | ar | ar-lb | 12289 | 1256 |
| English – Zimbabwe | en | | 12297 | 1252 |
| Spanish – Ecuador | es | es-ec | 12298 | 1252 |
| French – Cote d’Ivoire | fr | | 12300 | |
| Arabic – Kuwait | ar | ar-kw | 13313 | 1256 |
| English – Philippines | en | en-ph | 13321 | 1252 |
| Spanish – Chile | es | es-cl | 13322 | 1252 |
| French – Mali | fr | | 13324 | |
| Arabic – United Arab Emirates | ar | ar-ae | 14337 | 1256 |
| Spanish – Uruguay | es | es-uy | 14346 | 1252 |
| French – Morocco | fr | | 14348 | |
| Arabic – Bahrain | ar | ar-bh | 15361 | 1256 |
| Spanish – Paraguay | es | es-py | 15370 | 1252 |
| Arabic – Qatar | ar | ar-qa | 16385 | 1256 |
| English – India | en | en-in | 16393 | |
| Spanish – Bolivia | es | es-bo | 16394 | 1252 |
| Spanish – El Salvador | es | es-sv | 17418 | 1252 |
| Spanish – Honduras | es | es-hn | 18442 | 1252 |
| Spanish – Nicaragua | es | es-ni | 19466 | 1252 |
| Spanish – Puerto Rico | es | es-pr | 20490 | 1252 |